The European Union has approved the world's first AI act, a regulation that will play a significant role in citizens' daily lives. Nowadays, we hear about artificial intelligence everywhere: at the Christmas table, on social media, or almost imperceptibly when we unlock our phone with our face. AI is here to stay, and although the concept has been trendy for a few years now, many people are unaware that its origins date back to the 1950s. Since then, AI has kept evolving. The 2000s brought significant advances in machine learning and increases in data processing capacity, which made it globally accessible.

What does AI mean?

AI refers to computer systems created to perform tasks that typically require human intelligence, such as learning or reasoning. Faced with a given situation, an AI system can process information and reason about it to make decisions aimed at achieving a goal.

As technology advances, AI appears in more and more fields and tools, to the point where regulation has become necessary. We now find AI in fields that would have been impossible to imagine ten years ago. It can autonomously generate written content, write code in common programming languages, create scripts and other creative content, deliver real-time tutorials, and even compose music and artwork, among many other activities. Because AI is now used so widely, these intelligent models must comply with measures that ensure:

- transparency, to correct inappropriate practices.

- traceability, to track the evolution of systems.

- non-discrimination, to avoid unfair bias.

- measurement of environmental impact, to ensure environmental sustainability.

Different regulations according to risk

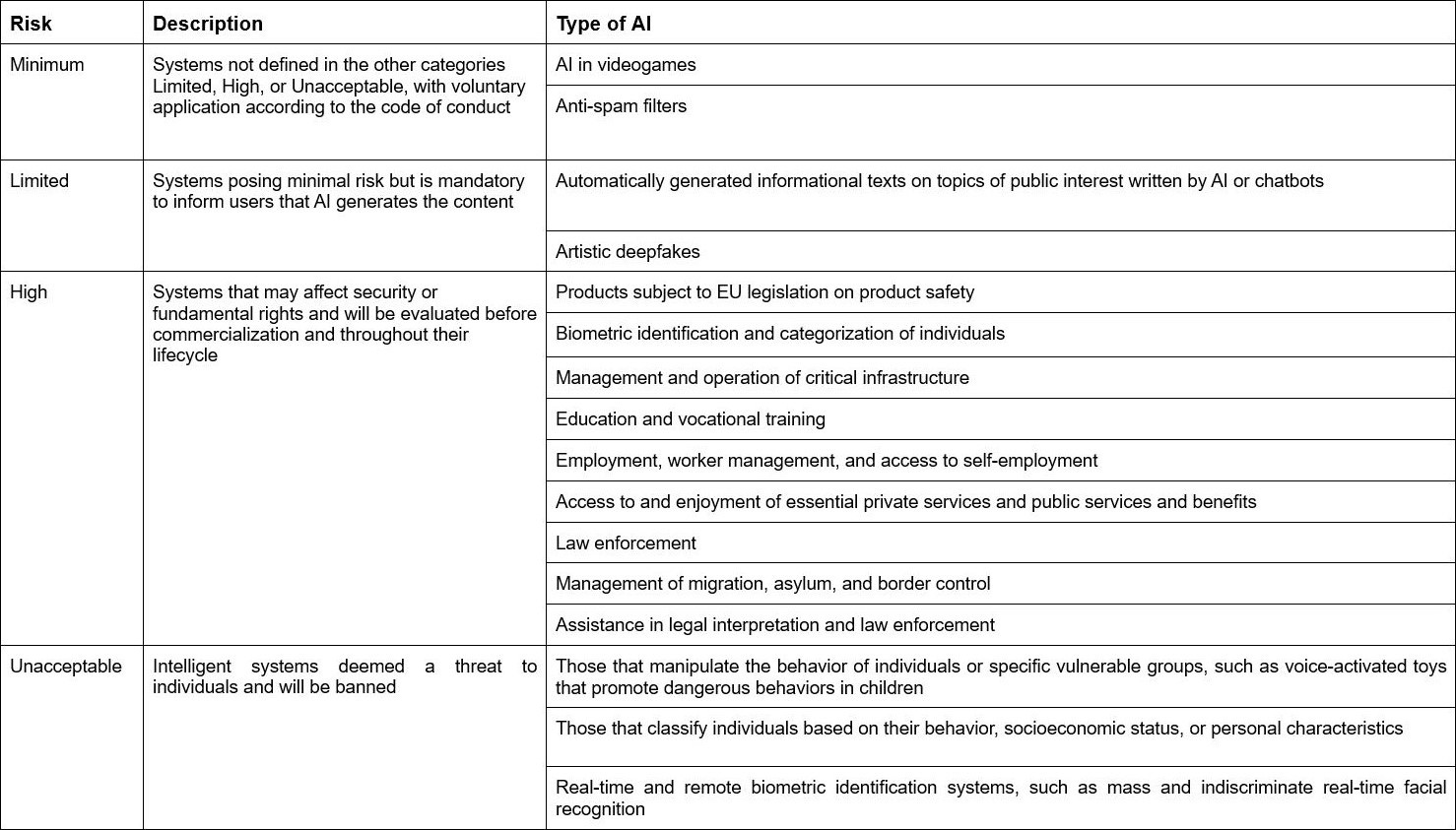

In May 2023, the European Union presented the first legislative proposal to regulate the uses and scope of AI. That process culminated in the approval of the world’s first AI act. This milestone had been under discussion since April 2021. Brussels presented the original proposal at that time, when the technology had not yet reached the point where limits needed to be set. The new act will be overseen by experts and set within a solid legal framework. This framework aims to provide a neutral, long-lasting definition of AI that can be applied to future advances. Companies that fail to meet their obligations face fines that can reach €35 million or

The act establishes the following risk levels, from lowest to highest:

Restrictions in the AI act for generative artificial intelligence

The act also includes a section on generative AI that applies regardless of the level of risk a system poses. Generative AI would have to comply with transparency requirements. These requirements could lead to some changes in these systems once the act comes into force.

One of the best-known examples of generative AI, which stood out last year, is OpenAI’s ChatGPT, and it will also be affected. Under the new rules, the company would have to disclose that content has been generated by AI. It would also have to design the model to prevent it from generating illegal content and publish summaries of the copyrighted data used for training.

Another system that may be affected is Microsoft’s new AI-based assistant, Copilot, which is already deployed in Windows 11. The Digital Markets Act, published at the end of 2022, has already slowed Copilot's rollout in several European countries, and a similar effect is expected with this new AI regulation.

Systems for detecting deepfakes and threats

Regulating AI and informing the public about it are necessary, but combining applications designed to prevent fraud may be the key. At Gradiant, together with Councilbox, we have been working within the GICTEL alliance to refine anti-fraud systems and detect spoofing attacks. So far, the results have been very positive. Deepfakes are becoming increasingly accurate and can be used for illicit purposes. Even so, we already have tools that allow us to protect ourselves from these threats. The solution is not to lament the consequences of such an attack, but to equip ourselves with the appropriate defenses to prevent it from happening.

DAGIA can detect threats and incidents in ICT infrastructures. It adapts the technologies of a Security Operations Center (SOC) to give users simple, automated cybersecurity management. At Gradiant, we are working on AI and process mining models to analyze the data and identify behavior patterns. These models cover cases both with and without anomalies, and they provide the user with metrics and alerts for subsequent analysis and response.

Operation co-financed by the European Union through the FEDER Galicia 2021-2027 Operational Program. Joint Research Unit for the development of various highly innovative technologies in the field of identity management and cybersecurity. FEDER, a way to make Europe.