The Rise of AI-Powered Digital Fraud

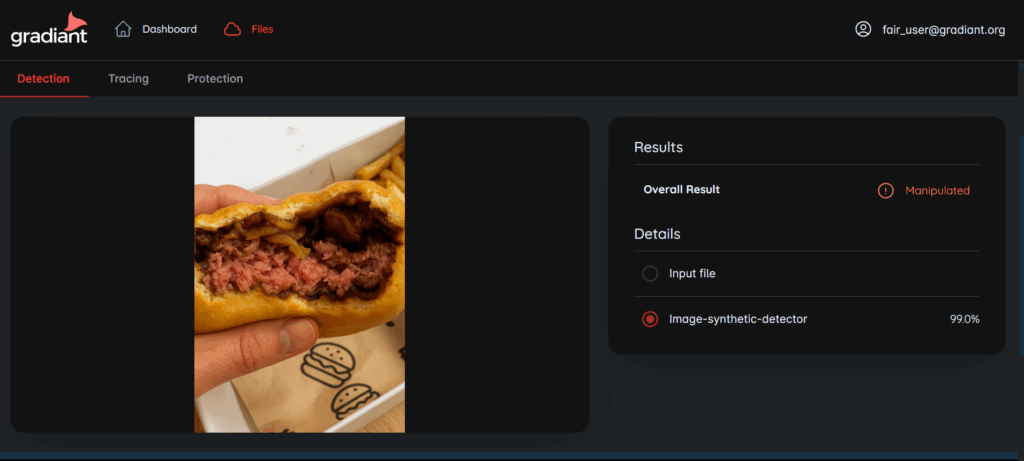

Image generated using ChatGPT

From claiming a food delivery refund with a fake photo to impersonating a CEO’s voice to steal millions, artificial intelligence is shaping new forms of fraud that are as subtle as they are dangerous.

Imagine answering the door to receive your food delivery. Everything looks normal, and you’re about to enjoy your meal. That burger looks delicious. You might even leave a positive review. But some people have discovered a new scam that’s spreading fast, all thanks to generative AI. These fraudsters enjoy free meals by digitally manipulating a photo of the dish to make it appear spoiled. With AI tools like ChatGPT, a simple prompt like “make the meat look undercooked” can generate a realistic version in seconds. The altered image is then sent with a complaint through the app, and within minutes, a refund is issued.

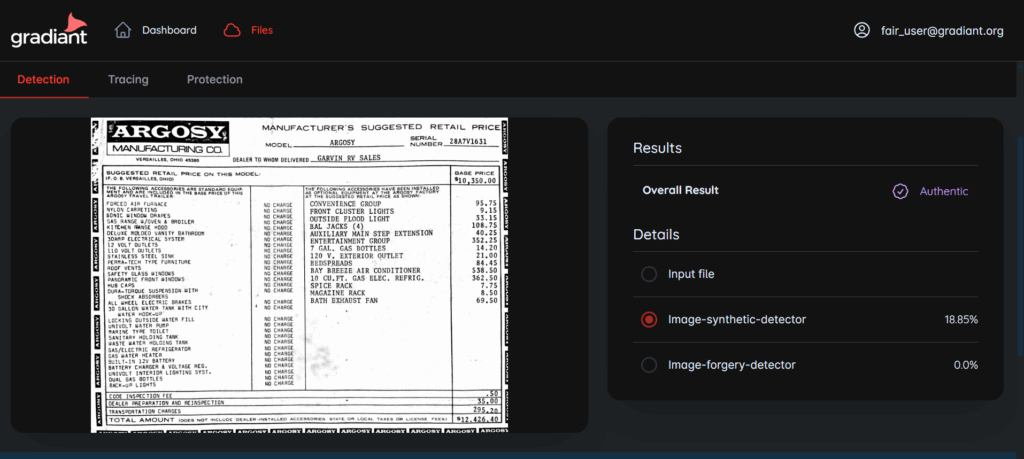

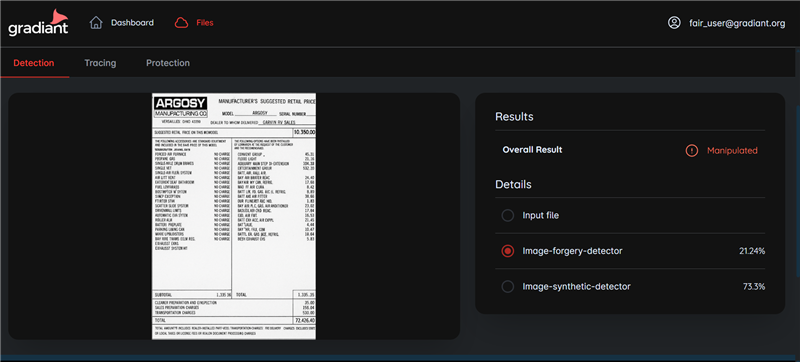

This “low-cost” fraud, known as a shallowfake, relies on minor yet highly convincing digital tweaks that bypass companies’ control systems. And it’s not limited to food apps: insurance companies, online retailers, and even public administrations are seeing a rise in fake claims using AI-modified images or documents (invoices, contracts, you name it). Uber Eats has seen a surge in fraud since 2024. In the UK, insurers report a 300% rise in AI-manipulated claims since 2021. In Spain, AXA detects digital tampering in 30% of submitted claims, compared to just 3% a few years ago. Think about it: asking an AI to add a scratch to a car or alter an invoice is surprisingly easy, and the result is often undetectable.

Shallowfakes Are Just the Tip of the Iceberg

AI-driven fraud has reached far more sophisticated levels. Remember that example at the start, a call from your bank or your boss asking for an urgent transfer? That convincing voice wasn’t real: it was voice hacking using AI.

Voice hacking replicates a person’s voice to say something they never actually said. It uses voice cloning powered by AI and deep learning, analyzing previous recordings to build a model that mimics the speech patterns, tone, rhythm, and accent of the target.

Real-world cases are chilling. Women like Jennifer DeStefano and Ruth Card received phone calls that seemed to come from kidnapped relatives (a daughter and a grandson, respectively). In the corporate world, the infamous “CEO scam” is increasingly common: an employee gets a call from someone who sounds exactly like their boss, ordering a fund transfer. One notable case in Hong Kong saw fraudsters steal €23 million. This wasn’t just one call; it was a full week of deepfake voice and video meetings with fake executives. Other scams have used AI-generated voices to sign contracts or create fake ads, like the one featuring Taylor Swift endorsing a set of cookware. The creativity of cybercriminals knows no bounds.

A Threat with Real-World Costs

This growing threat comes with a heavy price tag. According to Regula’s Deepfake Trends 2024 study, deepfake-related fraud costs organizations an average of $450,000. The financial services sector suffers the most, with average losses exceeding $603,000 per company.

Even more alarming: identity fraud costs have doubled in just two years, rising from $230,000 in 2022 to today’s figures. Fintech companies ($637,000 avg. loss) and traditional banks ($570,000) are the hardest hit, particularly by deepfake audio and video. Geographically, Mexico reported the highest losses ($627,000), followed by Singapore ($577,000) and the U.S. ($438,000).

Despite this, there’s a worrying disconnect: 56% of companies feel confident in their ability to detect deepfakes, yet only 6% have successfully avoided financial losses. This suggests many organizations are still unprepared for the sophistication of these threats.

fAIr: AI to Detect Synthetic Images, Videos, and Audio

But all is not lost. As cybercriminals grow more creative, so do the tools to fight back. Human eyes and ears can no longer be trusted to detect deepfakes: we need advanced technology.

At Gradiant, we apply cutting-edge AI and multimedia forensics to tackle the deepfake challenge. With deep expertise in multimedia analysis, applied AI, security and privacy, communication systems, and language technologies, we’re building robust, forward-looking solutions.

From this foundation comes fAIr by Gradiant, a platform that uses multimodal AI to detect and combat malicious uses of generative AI, identifying whether a video, image, or audio file has been manipulated or synthetically generated, and providing full traceability across messaging and social platforms. Thanks to this technology, we can even protect multimedia files against potential tampering, helping prevent their fraudulent or malicious use.

Multimodal Analysis

The strength of fAIr lies in its multimodal analysis approach, combining detection models across audio, image, and video for cross-validation. These AI models, trained using innovative data selection, curation, and augmentation techniques, are capable of identifying details invisible to the human eye. This solution could have helped prevent incidents like the video call fraud in Hong Kong.
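To make the idea of cross-validating several detectors more concrete, here is a minimal Python sketch of one common way to combine per-modality results, often called late fusion. It is an illustration only and does not describe how fAIr works internally: the function `fuse_scores`, the `FusionResult` fields, the weights, the 0.5 threshold, and the example scores are all assumptions made for this example. Each hypothetical detector is assumed to return a score between 0 (likely authentic) and 1 (likely synthetic).

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class FusionResult:
    score: float         # fused score in [0, 1]; higher means more likely synthetic
    flagged: bool        # True when the fused score reaches the decision threshold
    disagreement: float  # gap between the most and least suspicious modality


def fuse_scores(
    scores: Dict[str, float],
    weights: Optional[Dict[str, float]] = None,
    threshold: float = 0.5,  # assumed cut-off, not a value from the article
) -> FusionResult:
    """Combine per-modality scores with a weighted average (late fusion)."""
    if not scores:
        raise ValueError("at least one modality score is required")
    for name, value in scores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"score for {name!r} must be in [0, 1]")

    # Default to equal weights when none are supplied.
    if weights is None:
        weights = {name: 1.0 for name in scores}

    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")

    fused = sum(scores[name] * weights[name] for name in scores) / total_weight

    # A large gap between modalities is itself a signal worth reviewing,
    # for example a clean-looking image paired with suspicious audio.
    disagreement = max(scores.values()) - min(scores.values())

    return FusionResult(
        score=fused,
        flagged=fused >= threshold,
        disagreement=disagreement,
    )


if __name__ == "__main__":
    # Hypothetical detector outputs for a single recorded video call.
    result = fuse_scores({"audio": 0.9, "image": 0.4, "video": 0.8})
    print(result)
```

A weighted average is only one option. In practice the disagreement value could route borderline cases to a human reviewer instead of relying on the fused score alone.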

In short, while AI has opened the door to new forms of digital fraud, the same technology, when applied responsibly and intelligently, as in fAIr, becomes a key tool to verify authenticity, protect resources, and build trust in an increasingly complex digital world.

This publication is part of fAIr (Fight Fire with fAIr), funded by the European Union’s NextGenerationEU and Spain’s Recovery, Transformation and Resilience Plan (PRTR), through INCIBE.

The views and opinions expressed are solely those of the authors and do not necessarily reflect those of the European Union or the European Commission. Neither the EU nor the Commission is responsible for them.